A step by step guide

Create a REST API using Node.js for remote access of DuckDB. This makes it very easy to embed DuckDB in other applications, such as FileMaker.

With the included demo files (refer to bottom of this post) you can create a running test environment on your local machine within a few minutes. It is of course also possible to install the service on a separate machine and access it via HTTP request. This can be useful to easily integrate DuckDB into an existing production environment as a microservice.

All the necessary steps are explained in the following instructions.

1) Installation of node.js

Download Prebuilt Installer from the official site:

https://nodejs.org/en/download/prebuilt-installer

Run installer on your machine.

After node.js was installed – check whether the application responds inside your Terminal.

Query version:

node -v

2) Install demo file for API:

Download zip-archive with the demo files.

Create a folder “nodejs” in the user directory and copy the folder “duckdb-rest-api” into it.

Now change to the directory with the demo file:

cd /Users/yourname/nodejs/duckdb-rest-api/

Please put your user name in the colored position!

Install and query the current version of nmp (this step is optional because npm is usually installed by the nodejs installer):

npm install npm

npm -v

The “node_modules” folder is now updated.

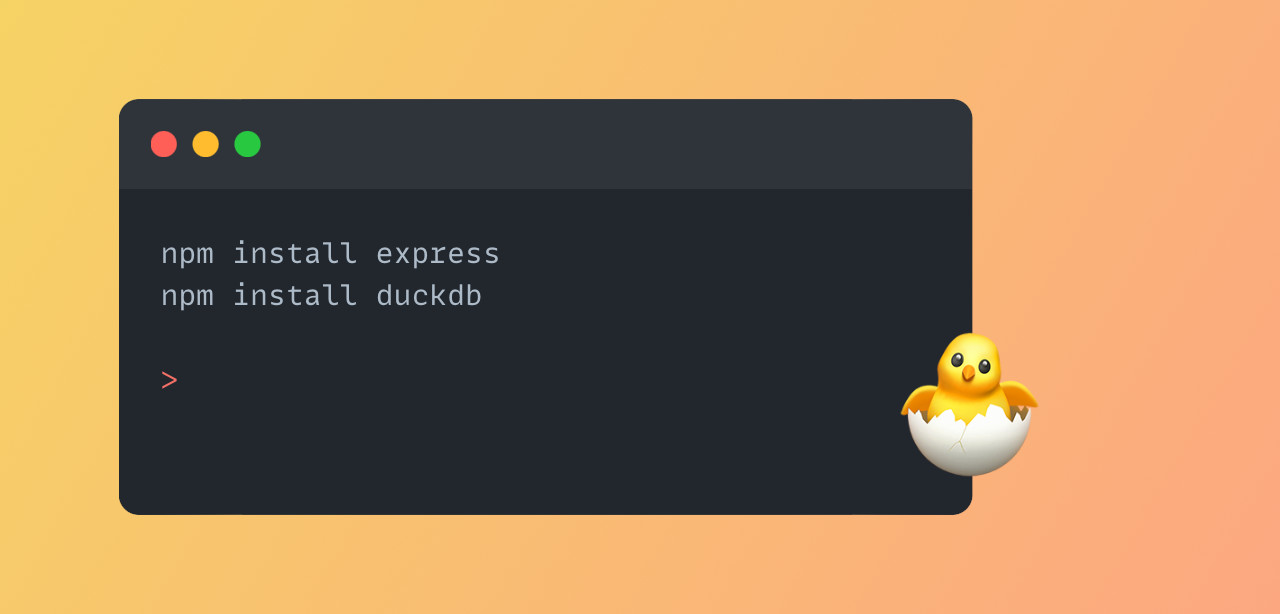

3) Install the required modules

These are already present in the supplied “node_modules” folder, they can be loaded in this way for a fresh installation:

npm install express

npm install duckdb

4) Start the API script

Change to the “duckdb-rest-api” folder (if not already done with the above steps) and run the script file:

node duck.js

node is the call to the Node.js engine

duck.js as parameter is the name of the API script file

A message should now appear in the terminal indicating that the API script is running and the local web service has been started:

Server Listening on PORT: 3000

5) Test query of the API in the browser (optional)

{“Status”: “Running”}

6) Open FileMaker file

Locate the provided demo file DuckDB API.fmp12 and open it with FileMaker Pro. You will find some basic examples to explore the possibilities of DuckDB.

You might study the included scripts and techniques to understand how it works. The file is unlocked and provides more information inside the ‘Read me’ script.

The FileMaker file communicates with the API via the contained script and sends the entered queries via ‘insert from URL‘ to the address ‘http://127.0.0.1:3000/‘. The responses are decoded accordingly and displayed in the given result field.

That’s it!

You are now able to run the demo requests and start your own experiments.

If you are not using the FileMaker demo file but want to integrate DuckDB into your own environment via the REST API, you can do this by simply sending POST requests to the specified local host URL on port 3000.

Have a look at the duck.js script file where you can find basic documentation about the supported request scheme.

It might be helpful to send your requests with Postman or a similar tool to understand the structure of the API communication via JSON messages. And to send your own SQL queries to the DuckDB engine.

If you want to deploy the setup on a separate computer or VM, it makes sense to create an autostart script for the REST API. Please refer to the following plist file to make it work via LaunchAgent on MacOS. For other platforms, a similar setup is required in the appropriate way for the respective operating system.

Same is applicable if you want to autostart the script on your local machine.

7) Set up LaunchAgent for Autostart

mkdir -p ~/Library/LaunchAgents nano ~/Library/LaunchAgents/com.username.nodescript.plist

This creates an empty plist file in the users Library folder with the name ‘com.username.nodescript.plist’ and opens the empty file with nano editor.

Now you can paste in and modify the following XML snippet:

<?xml version="1.0" encoding="UTF-8"?> <!DOCTYPE plist PUBLIC "-//Apple//DTD PLIST 1.0//EN" "http://www.apple.com/DTDs/PropertyList-1.0.dtd"> <plist version="1.0"> <dict> <key>Label</key> <string>com.username.nodescript</string> <key>ProgramArguments</key> <array> <string>/usr/local/bin/node</string> <string>/Users/yourname/nodejs/duckdb-rest-api/duck.js</string> </array> <key>WorkingDirectory</key> <string>/Users/yourname/nodejs/duckdb-rest-api/</string> <key>RunAtLoad</key> <true/> <key>KeepAlive</key> <true/> </dict> </plist>

Make sure to put your user name in the colored positions! The LaunchAgent config will not work if you only enter a shorthand for the user directory such as ‘~/nodejs/duckdb-rest-api/‘.

If the plist file with the contained xml snippet is present, MacOS starts the nodejs script file automatically on reboot and ensures that the working directory for the script is set correctly.

The script is executed in the background without a visible terminal window. You can check if the API is available by looking in your browser – see step 5) above.

Start Launch Agent manually:

launchctl load ~/Library/LaunchAgents/com.username.nodescript.plist

Stop Launch Agent manually:

launchctl unload ~/Library/LaunchAgents/com.username.nodescript.plist

Demo files

Demo files (FileMaker and node.js) are available for download here:

DuckDB_Demofiles.zip (PDF 29,8 MB)

Further reading:

- Integrating DuckDB with FileMaker

- How to Install Node.js and NPM on Windows and Mac?

- Discover DuckDB’s feature set in the documentation